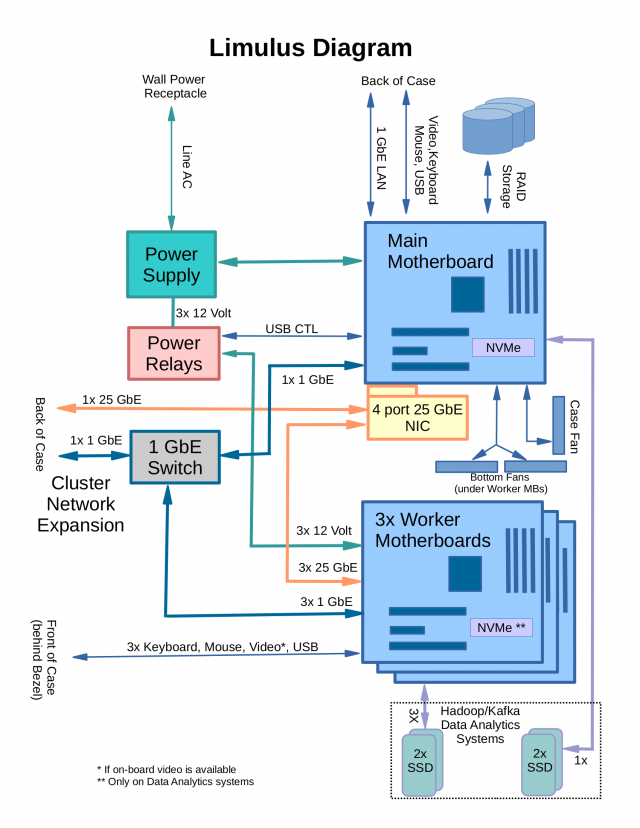

Functional Diagram

The figure illustrates the functional parts packaged in a standard Limulus system (either desk-side or rack-mount). The “double-wide” Limulus diagram is forthcoming. The double-wide system is similar but has eight motherboards in total (one of which is the main motherboard), two 1-GbE switches, two USB Power Relay boards, and an additional 25-GbE NIC to facilitate the 25-GbE switchless network.

Main Motherboard

This is the main system motherboard. Users log into this motherboard like any other Linux workstation that is connected to the LAN. It is mounted as a standard motherboard in the case and provides all the standard motherboard I/O, video, keyboard, mouse, and sometimes expansion cards. While any wattage of processor can run on this motherboard, the model is usually matched to the worker nodes. Main storage (OS, libraries, user/home, etc.) is placed on an enterprise SSD mounted in the NVMe slot. See RAID storage below for additional options.

Worker Motherboards

The worker motherboards are removable blades (see the blade description). Each of these motherboards has one processor (65 watts), memory, NVMe storage (for Data Analytics Clusters), and sometimes one PCIe card (using a 90-degree “bus bender”) and a custom blade panel. Currently, only 25 GbE is supported in this slot.

On HPC systems, the worker motherboards are booted and operate “disk-less” using a RAM disk created and managed by the Warewulf Toolkit.

On Data Analytics Systems (Hadoop, Spark, Kafka), the worker motherboards are booted using the local SSD drive occupying the NVMe slot. These motherboards are installed, maintained, and monitored by the Apache Ambari Management Tool.

All I/O is exposed on the front of the case bezel (or door on the rack-mount systems). Under normal operation, there is no need to access the I/O panel. All network cables and storage cables are connected to the front of the blade and must be removed before the blade is removed.

Twelve-volt (DC) power to the blade comes from a blind connector on the back of the blade. An onboard DC-DC converter is used to provide the needed voltages to drive the motherboard.

Blade design details can be found on the Limulus Compute Blades page.

NVMe Storage

As mentioned, each motherboard provides an NVMe storage option. The main node uses an enterprise-quality NVMe drive of at least 500 GB for all system storage. In general, an NVMe drive is not required on HPC system nodes; however, it can be included to provide local scratch storage on the nodes. On Data Analytics systems, a smaller NVMe is used to host the local OS and Hadoop/Spark/Kafka tools.

RAID Storage

A RAID storage option is available and runs from the main motherboard. Depending on the amount of storage, a RAID1 or RAID6 option is provided. There are six 3.5-inch spinning disk slots available (12 on the double-wide systems). All RAID is managed in software and, depending on the number of drives, additional high-speed SATA ports are provided by an add-in PCI card.

The RAID drives are removable from inside the case (i.e., they sit behind the side door).

Power Supply

Each Limulus system has a single power supply with a power cord (redundant options use two power cords). This power supply converts the AC line signal to the appropriate DC voltages to run the main motherboard and provides a number of 12V rails for video cards. These 12V rails are used to power the blades and the internal 1-GbE switch.

All power supplies are high quality 80+ Gold (or higher) and carry a minimum manufacturer's warranty of 5 years. The power supply wattage is matched to the specific models that are designed to work using standard electric service found in offices, labs, and homes. A redundant power supply option is available.

Power Relays

Twelve-volt power to the worker nodes is controlled by a series of relays (one per motherboard). The relays are under control of the main motherboard through a USB connection. Each worker motherboard blade can be power cycled individually.

On HPC systems, the worker nodes are powered off when the main motherboard starts. Either the user or the resource manager (Slurm) can control motherboard power. Optionally, all motherboards can be configured to start when the main motherboard starts.

On Data Analytics systems, all motherboards start when the main motherboard starts. The Hadoop worker daemons (HDFS and YARN) automatically join the cluster.
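
The power-up behavior described above can be sketched as a small in-memory model. This is only an illustration: the `RelayBank` class, the `relays_at_boot` function, and the `"hpc"` / `"data-analytics"` labels are hypothetical names, and the code performs no USB I/O. It assumes the three worker blades of a standard system.

```python
class RelayBank:
    """Toy in-memory stand-in for the USB relay board (performs no real I/O)."""

    def __init__(self, worker_count=3):
        # One relay per worker motherboard; False means the 12 V feed is off.
        self.powered = {n: False for n in range(1, worker_count + 1)}

    def power_on(self, worker):
        self.powered[worker] = True

    def power_off(self, worker):
        self.powered[worker] = False

    def power_cycle(self, worker):
        # Only the selected blade is affected; the others keep their state.
        self.power_off(worker)
        self.power_on(worker)


def relays_at_boot(system_type, start_all=False, worker_count=3):
    """Return the relay state right after the main motherboard starts.

    system_type is a hypothetical label: "hpc" or "data-analytics".
    start_all models the optional "start all motherboards" setting.
    """
    if system_type not in ("hpc", "data-analytics"):
        raise ValueError(f"unknown system type: {system_type!r}")
    bank = RelayBank(worker_count)
    if system_type == "data-analytics" or start_all:
        for worker in bank.powered:
            bank.power_on(worker)
    return bank


hpc = relays_at_boot("hpc")
hpc.power_on(2)  # e.g. a user or the resource manager requests blade 2
print(hpc.powered)
print(relays_at_boot("data-analytics").powered)
```

In this model an HPC system starts with every worker relay open, and blades are switched on individually on request, while a Data Analytics system starts with all relays closed.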

LAN Connection

A 1-GbE LAN connection is available on the back of the case. The interface is configured for DHCP and is normally provided by a NIC inserted in the main motherboard. The on-board 1-GbE port on the main motherboard is used to connect to the internal cluster network.

1-GbE Switch and Internal Cluster Network

An eight-port 1-GbE switch is housed inside the case. It sits above the power supply and connects all the nodes in the cluster. On the double-wide systems, a second 1-GbE switch is on the opposite side of the case (toward the top rear). There are two ports exposed on the back of the case. One of these ports is used to connect the main motherboard 1-GbE link to the internal switch using a short CAT6 cable. The second open port can be used for expansion or connection to a storage device (e.g. to expose a NAS device to the internal cluster network). The 1-GbE network uses non-routable IP addresses (192.168.0.X) and is used as an internal cluster network.
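
As a quick check of the addressing described above, Python's standard `ipaddress` module can confirm that the 192.168.0.X range is private (non-routable) address space. The /24 prefix length is an assumption inferred from the "192.168.0.X" notation, and the sample host addresses are made up for illustration.

```python
import ipaddress

# Assumption: "192.168.0.X" means a /24 network (only the last octet varies).
cluster_net = ipaddress.ip_network("192.168.0.0/24")

# Private (RFC 1918) ranges are not routed on the public Internet.
print(cluster_net.is_private)

# Number of usable host addresses in a /24.
print(cluster_net.num_addresses - 2)

# Hypothetical node addresses, used only to show membership tests.
print(ipaddress.ip_address("192.168.0.10") in cluster_net)
print(ipaddress.ip_address("192.168.1.10") in cluster_net)
```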

25-GbE Network

A high-performance 25-GbE network is available as an option on some systems (and on others, it is standard). Similar to the 1-GbE network, the 25-GbE is a non-routable (192.168.1.X) internal network. The “switchless” network is achieved by using a four-port 25-GbE NIC on the main motherboard. Three of the four ports are connected to the worker motherboards. Nominally, the three worker ports are combined with the host to form an Ethernet bridge. In the bridge configuration, the bridge appears on the network as a single interface with one IP address. Communication between workers is forwarded to the correct port (“bridged”) through the host. The remaining port on the four-port NIC is available for expansion.

If configured, the worker motherboards have a single-port 25-GbE NIC as part of the removable blade. A cable from this port on each worker blade is brought out to the back of the case and connected to the four-port 25-GbE NIC.

On the double-wide Limulus systems, there is a second four-port NIC on one of the additional worker motherboards. This NIC is connected to the other three worker motherboards and added to the bridge by connecting the extra port on each four-port 25-GbE NIC.
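
To illustrate what "bridged through the host" means for the standard three-worker layout, here is a toy model of a learning Ethernet bridge. It is a sketch of the general technique rather than the Limulus configuration: the `ToyBridge` class, the port names, and the MAC addresses are all hypothetical, and a real system would rely on the operating system's bridge support.

```python
class ToyBridge:
    """Toy learning bridge: remembers which port each source MAC was seen on."""

    def __init__(self, ports):
        self.ports = list(ports)
        self.mac_table = {}  # MAC address -> port it was last seen on

    def receive(self, in_port, src_mac, dst_mac):
        """Return the list of ports a frame is forwarded out of."""
        self.mac_table[src_mac] = in_port  # learn where the sender lives
        out_port = self.mac_table.get(dst_mac)
        if out_port is None:
            # Unknown destination: flood to every port except the ingress port.
            return [p for p in self.ports if p != in_port]
        if out_port == in_port:
            return []  # destination is on the same port; nothing to forward
        return [out_port]


# Hypothetical names for the three worker-facing ports of the four-port NIC.
bridge = ToyBridge(["worker1", "worker2", "worker3"])

print(bridge.receive("worker1", "aa:aa", "bb:bb"))  # destination unknown: flooded
print(bridge.receive("worker2", "bb:bb", "aa:aa"))  # aa:aa was learned on worker1
print(bridge.receive("worker1", "aa:aa", "bb:bb"))  # bb:bb is now known
```

After the first exchange, worker-to-worker frames leave only on the port that leads to the destination, which is the forwarding behavior the text describes.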

Data Analytics SSDs

On Data Analytics (Hadoop/Spark) systems, each node can have up to two SSD drives. These drives are used exclusively for Hadoop storage (HDFS). System software for each node, including all analytics applications, is stored on a local NVMe disk (which is housed in the M.2 slot on the motherboard).

The Data Analytics drives are removable and are located in the front of the case. Each Limulus system has eight front-facing removable drive slots (double-wide systems have sixteen). Each blade provides two SATA connections that are routed to the removable drive cage. At minimum, each motherboard (including the main motherboard) has one of its drive slots occupied, for a total of four SSDs (eight on double-wide systems). Users can expand the HDFS storage by adding SSD drives to the second drive slot, either at time of purchase or in the future.
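
The slot counts above follow from two slots per motherboard. A minimal sketch of that arithmetic, where the `drive_slots` function name and the dictionary keys are hypothetical:

```python
SLOTS_PER_MOTHERBOARD = 2  # each motherboard has two SATA connections to the drive cage


def drive_slots(motherboards):
    """Slot arithmetic for a system with the given number of motherboards."""
    total = motherboards * SLOTS_PER_MOTHERBOARD
    minimum_ssds = motherboards  # at least one occupied slot per motherboard
    return {
        "total_slots": total,
        "minimum_ssds": minimum_ssds,
        "expansion_slots": total - minimum_ssds,
    }


print(drive_slots(4))  # standard system: main motherboard plus three workers
print(drive_slots(8))  # double-wide system
```

With four motherboards this gives eight slots and a minimum of four SSDs; with eight motherboards, sixteen slots and a minimum of eight SSDs.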

Cooling Fans

There are seven cooling fans in each case (eleven in the double-wide systems). Four of these fans are on the processor coolers (heat sinks), one per motherboard. Each of these fans is controlled by the motherboard on which it is housed (i.e., as the processor temperature increases, so does the fan speed).

There are two large fans on the bottom of the case. These fans pull air from underneath the case and push it into the three worker blades. The fans are attached to an auxiliary fan port on the main motherboard using a “Y” splitter. A monitoring daemon running on the main motherboard checks the processor temperature of each blade and reports the highest temperature to a second fan control daemon that controls the auxiliary fan port. If any blade temperature increases, the bottom intake fan speed is increased.
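
The two-daemon scheme above amounts to "take the hottest blade, then map that temperature to a fan speed." The sketch below shows one possible mapping. The function names, the linear ramp, and every threshold value are assumptions chosen for illustration; the actual daemons and their settings are not described here.

```python
def hottest_blade_temp(blade_temps_c):
    """Monitoring step: report the highest processor temperature among the blades."""
    return max(blade_temps_c.values())


def intake_fan_duty(temp_c, low_c=40.0, high_c=80.0, min_duty=30, max_duty=100):
    """Control step: map a temperature to a fan duty cycle in percent.

    Hypothetical policy: hold min_duty up to low_c, ramp linearly,
    and hold max_duty at or above high_c.
    """
    if temp_c <= low_c:
        return min_duty
    if temp_c >= high_c:
        return max_duty
    fraction = (temp_c - low_c) / (high_c - low_c)
    return round(min_duty + fraction * (max_duty - min_duty))


# Made-up readings for the three worker blades, in degrees Celsius.
temps = {"blade1": 45.0, "blade2": 60.0, "blade3": 50.0}
hottest = hottest_blade_temp(temps)
print(hottest, intake_fan_duty(hottest))
```

Driving both intake fans from the single hottest reading matches the shared “Y” splitter: the fans cannot be controlled separately, so the warmest blade sets the speed for all three.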

The remaining fan is on the back of the case and runs at constant speed. This fan is used to help move air through the case.