HPC Cluster Monitoring

Ganglia

On HPC systems, the popular Ganglia monitoring tool is available. To bring up the Ganglia interface, simply enter:

http://localhost/ganglia/

In Firefox (or any other browser that is installed on the system), the default screen is shown below.

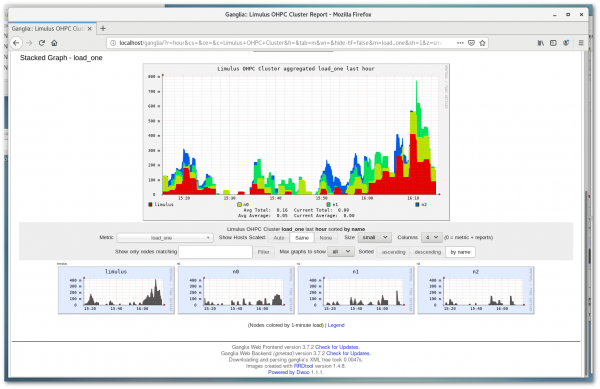

Clicking on the Limulus OHPC Cluster in the Choose a Source drop-down menu will show the individual nodes in the cluster. The load_one (one minute load) is displayed in total and for the individual nodes (shown below).

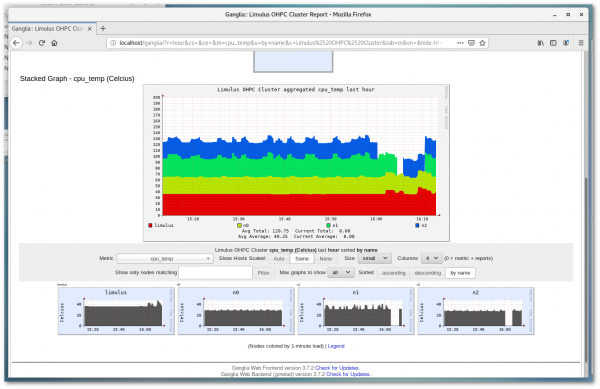

Note that, in addition to a myriad of other metrics, it is possible to observe the CPU temperatures by selecting cpu_temp (as shown below).

More information on using and configuring Ganglia can be found at the Ganglia web site.
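The load_one and cpu_temp metrics described above can also be read without a browser. The sketch below is a minimal example, not part of the Limulus tooling. It assumes that the Ganglia gmond daemon is running with its default behavior of dumping the cluster state as XML to any client that connects to TCP port 8649, and that `nc` is installed; neither is stated on this page. `ganglia_metric` is a hypothetical helper name.

```shell
# Print "host value" pairs for one Ganglia metric from gmond XML on stdin.
# Assumes each <HOST ...> and <METRIC ...> element starts on its own line,
# which is how gmond normally writes its XML dump.
ganglia_metric() {
    awk -v metric="$1" '
        /<HOST / {
            if (match($0, /NAME="[^"]*"/))
                host = substr($0, RSTART + 6, RLENGTH - 7)
        }
        /<METRIC / && index($0, "NAME=\"" metric "\"") {
            if (match($0, /VAL="[^"]*"/))
                print host, substr($0, RSTART + 5, RLENGTH - 6)
        }'
}

# Example (assumption: gmond answers on localhost:8649):
#   nc localhost 8649 | ganglia_metric cpu_temp
```

Reading the XML from stdin keeps the helper independent of how the dump is obtained, so it works equally well on a saved file.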

Warewulf Top (wwtop)

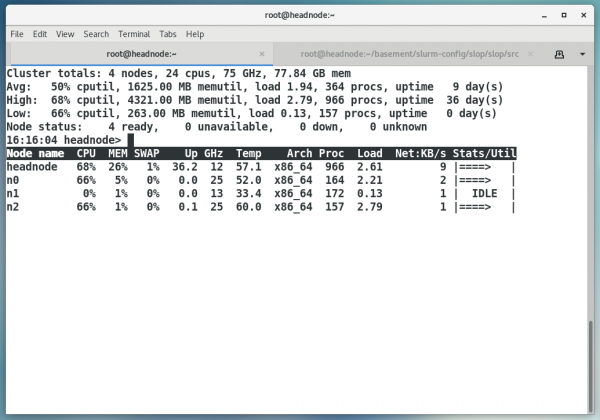

Warewulf Top is a command line tool for monitoring the state of the cluster. Similar to the top command, wwtop is part of the Warewulf cluster provisioning and management system used on Limulus HPC systems. wwtop has been augmented to work directly with Limulus systems, and real-time CPU temperatures and frequencies are now reported. To run Warewulf Top, enter:

$ wwtop

The following screen will update in real time for nodes that are active (booted).

Operation of the wwtop interface is described by the command's help output, shown below.

USAGE: /usr/bin/wwtop [options]

About:

wwtop is the Warewulf 'top' like monitor. It shows the nodes ordered by

the highest utilization, and important statics about each node and

general summary type data. This is an interactive curses based tool.

Options:

-h, --help Show this banner

-o, --one_pass Perform one pass and halt

Runtime Options:

Filters (can also be used as command line options):

i Display only idle nodes

d Display only dead and non 'Ready' nodes

f Flush any current filters

Commands:

s Sort by: nodename, CPU, memory, network utilization

r Reverse the sort order

p Pause the display

q Quit

Views:

You can use the page up, page down, home and end keys to scroll through

multiple pages.

This tool is part of the Warewulf cluster distribution

http://warewulf.lbl.gov/

In addition to the temperature updates, wwtop now offers a new “one pass” option where a single report for all nodes is sent to the screen. This output is useful for grabbing snapshots of cluster activity. To provide a clean text output (no escape sequences) use the following command:

$ wwtop -o|sed "s,\x1B\[[0-9;]*[a-zA-Z],\n,g" |grep .

A report similar to the following will be written to the screen (or file as directed):

Cluster totals: 4 nodes, 24 cpus, 33 GHz, 77.84 GB mem

Avg: 0% cputil, 923.00 MB memutil, load 0.04, 251 procs, uptime 4 day(s)

High: 0% cputil, 2856.00 MB memutil, load 0.08, 523 procs, uptime 19 day(s)

Low: 0% cputil, 251.00 MB memutil, load 0.00, 148 procs, uptime 0 day(s)

Node status: 4 ready, 0 unavailable, 0 down, 0 unknown

Node name CPU MEM SWAP Up GHz Temp Arch Proc Load Net:KB/s Stats/Util

headnode 0% 17% 0% 19.1 12 38.0 x86_64 523 0.08 22 | |

n0 0% 0% 0% 0.2 4 30.0 x86_64 158 0.04 0 | |

n1 0% 1% 0% 0.0 13 33.8 x86_64 175 0.04 0 | IDLE |

n2 0% 1% 0% 0.0 4 28.0 x86_64 148 0.00 1 | |
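Because the one-pass report is plain text, it can be filtered with standard tools. The following sketch wraps the clean-up command from above in a function and adds a filter that lists nodes whose Temp column exceeds a limit. The function names `wwtop_clean` and `hot_nodes` are hypothetical, and the filter assumes the column order of the sample report (Temp as the seventh field); adjust it if your wwtop output differs.

```shell
# Strip terminal escape sequences from wwtop output read on stdin.
# Same sed/grep pipeline as above; \x1B and \n in sed are GNU extensions.
wwtop_clean() {
    sed 's,\x1B\[[0-9;]*[a-zA-Z],\n,g' | grep .
}

# Print "node temp" for every node row whose Temp column exceeds the limit.
# Rows are only considered after the "Node name" header line has been seen.
# Assumption: Temp is field 7, as in the sample report.
hot_nodes() {
    awk -v limit="$1" '
        /^ *Node name/ { in_table = 1; next }
        in_table && NF >= 7 && $7 + 0 > limit + 0 { print $1, $7 }'
}

# Example: list nodes hotter than 35 degrees in a single snapshot
#   wwtop -o | wwtop_clean | hot_nodes 35
```

Run from cron or a loop, a pipeline like this could append snapshots to a log file for later review.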

Slurm Top (slop)

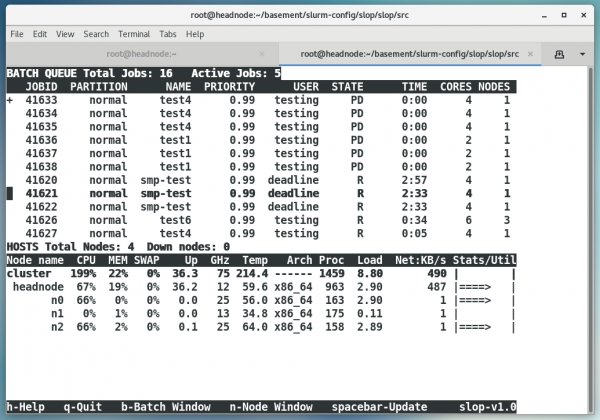

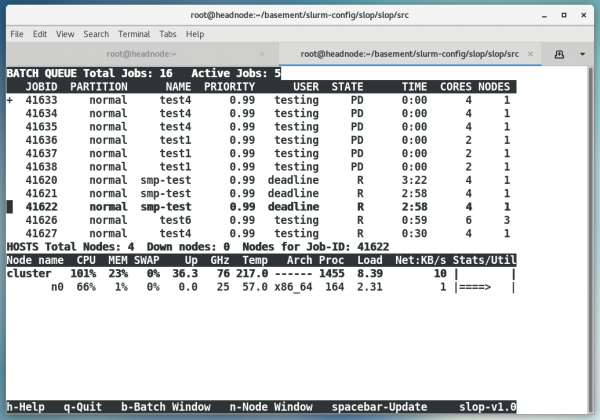

A real-time, text-based Slurm “top-like” tool called slop (SLurm tOP) is provided on all Limulus HPC systems. Slop allows the batch queue and node status (similar to wwtop above) to be viewed from a text terminal. The screen updates every 20 seconds, but can be refreshed at any time by pressing the space bar. An example of the slop interface is shown below.

The above example shows the Slurm batch queue in the top pane with job ID, partition, user, etc. The bottom pane displays the cluster node metrics, similar to wwtop. Pressing the h key will bring up the help menu (as shown below). Note: additional help with Slurm job states is also available.

Slop (SLurm tOP) displays node statistics and the batch queue on a cluster. The top window is the batch queue and the bottom window are the hosts. The windows update automatically and are scrollable with the arrow keys. A "+" indicates that the list will scroll further.

Available options:

q - to quit userstat

h - to get this help

b - to make the batch window active

n - to make the nodes window active

spacebar - update windows (automatic update after 20 seconds)

up_arrow - to move though the jobs or nodes window

down_arrow - to move though the jobs or nodes window

Pg Up/Down - move a whole page in the jobs or nodes window

Queue Window Commands:

j - sort on job-ID

u - sort on user name

p - sort on program name

a - redisplay all jobs

d - delete a job from the queue

return - display only the nodes for that job

Host Window Commands:

s - sort on system hostname

a - redisplay all hosts

return - top snapshot for node

*nodename* means node is down

(When sorting on multiple parameters all matches are displayed.)

Press 'S' for Slurm Job State Help, or any other key to exit
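For scripts or log files, a one-shot view of the queue pane can be approximated with the standard Slurm `squeue` command. This is a sketch of an equivalent, not how slop itself is implemented; `queue_snapshot` is a hypothetical helper name, and the chosen columns (job ID, partition, user, state, node list) are only one reasonable selection.

```shell
# Print the Slurm queue once: job ID, partition, user, state, node list.
# The optional argument is a squeue sort key using squeue's field letters:
#   i = job ID (default), u = user name, j = job name.
queue_snapshot() {
    squeue --sort="${1:-i}" --format="%i %P %u %T %N"
}

# Example: queue sorted by user name
#   queue_snapshot u
```

The sort keys loosely mirror the j, u, and p sort commands of the slop queue window.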

A useful feature of slop is the ability to “drill down” into job resources. For instance, if the cursor is placed on a job and then “Return” is pressed, only those nodes that are used by that particular job are displayed. In the following image, the node used for job 41622 is displayed in the lower pane.

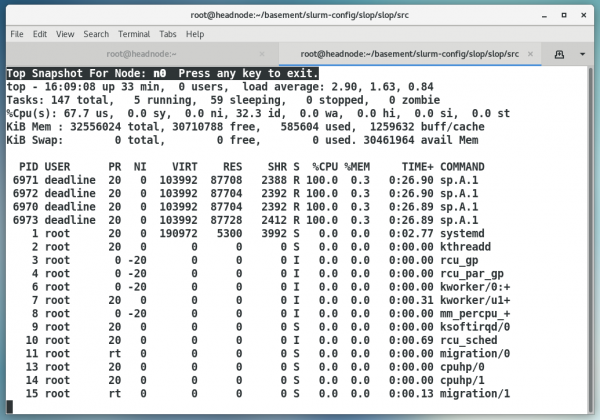

If more specific node information is needed, a standard top snapshot is returned when the cursor is placed on a node in the lower Host pane (switch to the host pane by entering “n”) and “Return” is pressed. A top snapshot for node n0 (running job 41622 in the above image) is shown below.
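The same drill-down, from job to nodes to a top snapshot, can be sketched from the command line with standard tools. This illustrates the idea and is not slop's own implementation. It assumes password-less ssh from the head node to the compute nodes and the procps `top` on each node; `job_nodes` and `node_top` are hypothetical helper names.

```shell
# Nodes allocated to one Slurm job, one hostname per line.
# squeue prints a compressed list such as n[0-2]; scontrol expands it.
job_nodes() {
    scontrol show hostnames "$(squeue --noheader --jobs="$1" --format="%N")"
}

# One batch-mode top snapshot from a node, trimmed to the first N lines
# (default 15). Assumes password-less ssh to the node.
node_top() {
    ssh "$1" top -b -n 1 | head -n "${2:-15}"
}

# Example: top snapshot for every node used by job 41622
#   for node in $(job_nodes 41622); do node_top "$node"; done
```

Trimming the output with `head` keeps the summary lines and the busiest processes, which is usually what is wanted from a snapshot.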

For more information on slop consult the man page.

Data Analytics Cluster Monitoring

Data analytics systems (e.g., Hadoop, Spark, Kafka) are managed by Apache Ambari. Ambari is a web-based management tool designed to make managing Hadoop clusters simpler. This includes provisioning, managing, and monitoring Apache Hadoop clusters (which often include tools such as Spark and Kafka). Ambari provides an intuitive, easy-to-use Hadoop management web UI. An example of the Ambari dashboard is provided below.